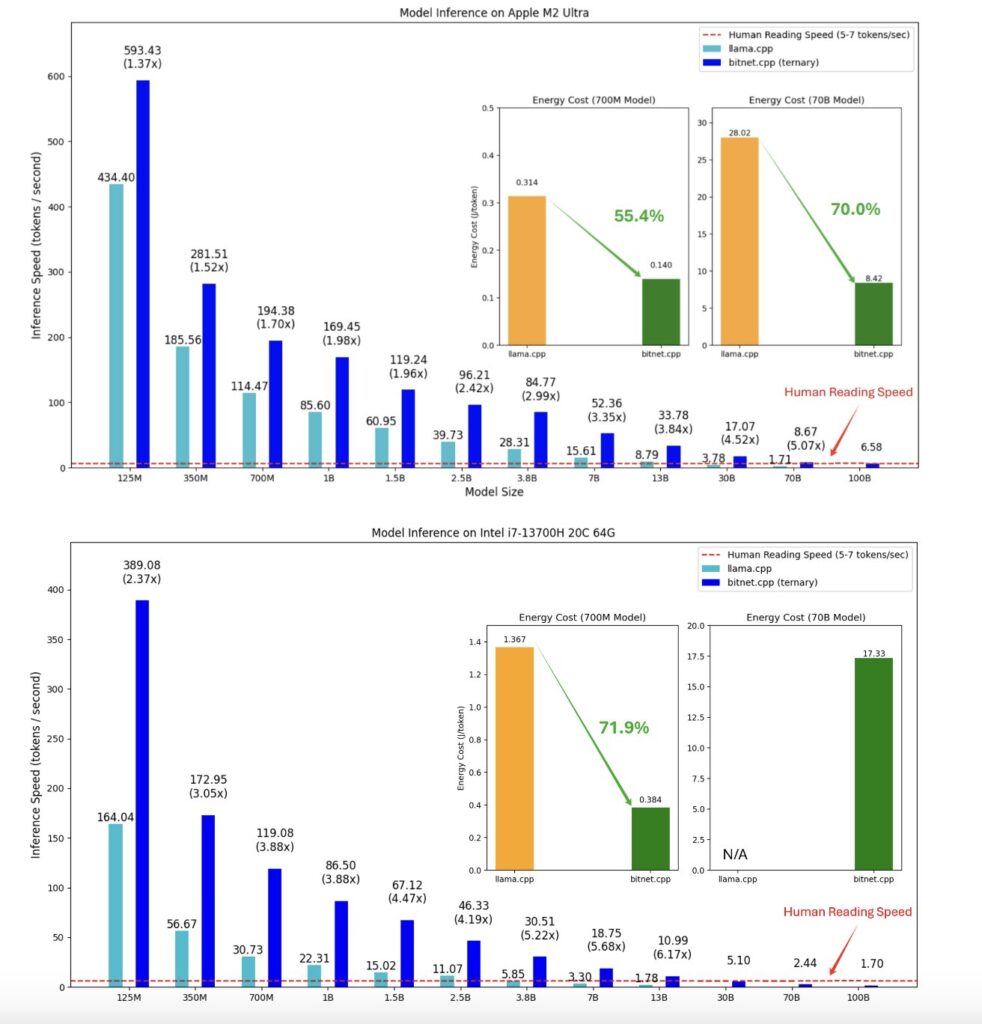

Large language models (LLMs) running on GPUs consume a great deal of energy, which takes a toll on the environment. The aim of advancing artificial intelligence (AI) is to help people in daily life while also preserving the environment. To address these concerns, Microsoft Research introduced BitNet, a 1-bit Transformer architecture designed specifically for large language models. The approach seeks to reduce the resource demands of training and deploying these models while remaining competitive with state-of-the-art 8-bit quantization methods and FP16 Transformer baselines.

The core innovation in BitNet is the BitLinear layer, which replaces the traditional floating-point linear layer with one that uses 1-bit weights, drastically reducing the model’s memory footprint and computational requirements. BitNet quantizes model weights to binary values (+1 or -1) using the signum function and applies absmax quantization to the activations, so that the matrix multiplication runs in low-precision arithmetic. During training, BitNet relies on LayerNorm and the straight-through estimator (STE) to maintain gradient flow and training stability despite the reduced precision. It also uses group quantization and normalization to enable efficient model parallelism, allowing large models to scale while minimizing inter-device communication overhead. The result is a significant reduction in energy consumption and memory usage, with performance that stays competitive with full-precision models.
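The forward pass described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names are made up for this post, and the 8-bit activation width, the zero-mean centering of the weights, the rounding step, and the mean-absolute-value scale `beta` are assumptions that the text above does not spell out. Training details such as the straight-through estimator and group quantization are left out.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each row to zero mean and unit variance (no learned parameters)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def binarize_weights(w):
    """Map weights to +1 / -1 with a sign function after centering (assumption)."""
    centered = w - w.mean()
    w_bin = np.where(centered >= 0, 1.0, -1.0)  # zero is mapped to +1
    beta = np.abs(w).mean()                     # assumed scale for rescaling the output
    return w_bin, beta

def absmax_quantize(x, bits=8, eps=1e-5):
    """Scale activations by their absolute maximum into a signed integer range."""
    q = 2 ** (bits - 1)
    gamma = max(np.abs(x).max(), eps)           # absmax of the whole tensor
    x_q = np.clip(np.round(x * q / gamma), -q + 1, q - 1)
    return x_q, gamma, q

def bit_linear(x, w):
    """Sketch of a BitLinear forward pass: normalize, quantize, multiply, rescale."""
    w_bin, beta = binarize_weights(w)
    x_q, gamma, q = absmax_quantize(layer_norm(x))
    y = x_q @ w_bin.T                           # only +1/-1 weights and integer-valued activations
    return y * (beta * gamma / q)               # map the result back to the original scale

# Hypothetical shapes: a batch of 2 inputs, 8 input features, 4 output features.
rng = np.random.default_rng(0)
x = rng.normal(size=(2, 8))
w = rng.normal(size=(4, 8))
y = bit_linear(x, w)
```

Because every weight is +1 or -1, the matrix product reduces to additions and subtractions of the quantized activations, which is where the compute and energy savings come from.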

BitNet represents a significant advancement in optimizing large language models through 1-bit quantization, reducing memory usage, energy consumption, and computational costs compared to FP16 Transformers. Its innovative features, like BitLinear and absmax quantization, maintain performance while improving efficiency, enabling scalable deployment on commodity hardware. Now open-source, BitNet offers a promising solution for real-world AI applications, balancing high performance with reduced resource requirements.
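To make the memory claim concrete, here is a rough back-of-the-envelope calculation. The 7-billion-parameter model size is a hypothetical example, and the figures cover weight storage only: embeddings, activations, and any layers kept in higher precision are ignored, so real savings would be smaller.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Storage needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

num_params = 7_000_000_000                         # hypothetical model size
fp16_gb = weight_memory_gb(num_params, 16)         # 16 bits per weight
one_bit_gb = weight_memory_gb(num_params, 1)       # 1 bit per weight

print(f"FP16 weights:  {fp16_gb:.3f} GB")          # 14.000 GB
print(f"1-bit weights: {one_bit_gb:.3f} GB")       # 0.875 GB
print(f"Reduction:     {fp16_gb / one_bit_gb:.0f}x")  # 16x
```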

Open-source projects like this one will propel AI development in a positive direction. They benefit the community and create a harmonious culture in which anyone can contribute to building the future we dream of.

Reference:

Hongyu Wang et al. “BitNet: Scaling 1-bit Transformers for Large Language Models” Microsoft 2024